Antenna SQL Access

Antenna SQL Overview

Beeswax gives customers access to a private data warehouse to query and utilize raw log data without requiring complex and costly ETL processes. Antenna SQL access can be Beeswax- or customer-hosted.

Views

| View Name | Log Representation | Delay | Table Retention |

|---|---|---|---|

shared_attributed_conversions_view | Attributed Conversions | 5 Hours | 385 days |

shared_attributed_conversions_ip_address_view | IP Attributed Conversions | 5 Hours | 385 days |

shared_bids_view | Bid Logs | 1 Hour | 30 days |

shared_bids_response_feedback_view | Loss Logs | 1 Hour | 30 days |

shared_conversions_view | Conversion Logs | 3 Hours | 385 days |

shared_impression_details_view | Win logs | 1 Hour | 385 days |

Joining Datasets

For the purposes of your analysis, it may be useful to join tables together. All of your provided tables contain a column called auction_id. This is Beeswax’s proprietary assigned auction ID and can be used as a join key to join any of these tables together.

For example, to join your wins with the details about the associated attributed conversions, you could write a query like the following:

SELECT ...

FROM antenna.shared_impression_details_view i

LEFT JOIN antenna.shared_attributed_conversions_view c

ON i.auction_id = c.auction_id

This query will return all impression rows, and any rows that had a matching attributed conversion. Note that in the above example, rows from the impression details table may be duplicated because the relationship between impressions and attributed conversions is one-to-many.

Available Fields

To view the available fields for querying in Antenna, see the publicly accessible schema files on Github here.

Monitoring Your Credit Usage

Credits

When querying using a Snowflake Warehouse you will spend Snowflake credits for the compute costs of running queries. The more complex and computationally taxing a query is, the more credits a query will consume. Credits are charged per second of active warehouse time, i.e., at least one query is running. Larger warehouses will run through queries more quickly, but also will spend more credits. You can find more information around re-sizing warehouses in Snowflake’s Documentation, or in the section on Working with Warehouses below.

At writing, hourly credit consumption by warehouse size is the following:

| X-Small | Small | Medium | Large | X-Large | 2XL | 3XL | 4XL |

|---|---|---|---|---|---|---|---|

| 1 | 2 | 4 | 8 | 16 | 32 | 64 | 128 |

Warehouses can be set to auto-suspend after a certain amount of time with no queries running. The minimum for this time threshold is 60 seconds, meaning each query will incur at least 60 seconds of credit consumption.

Credit consumption is more crucial in a Beeswax-hosted instance, as Beeswax will bill customers for their Antenna-related credit consumption. Customers hosting Antenna in their own Snowflake account will be billed for credits consumed on their own organization's Snowflake bill, and should follow their own organization’s policies around credit consumption.

Monitoring Tools

Beeswax-hosted Antenna customers should be able to see an icon on that top with "Account" info:

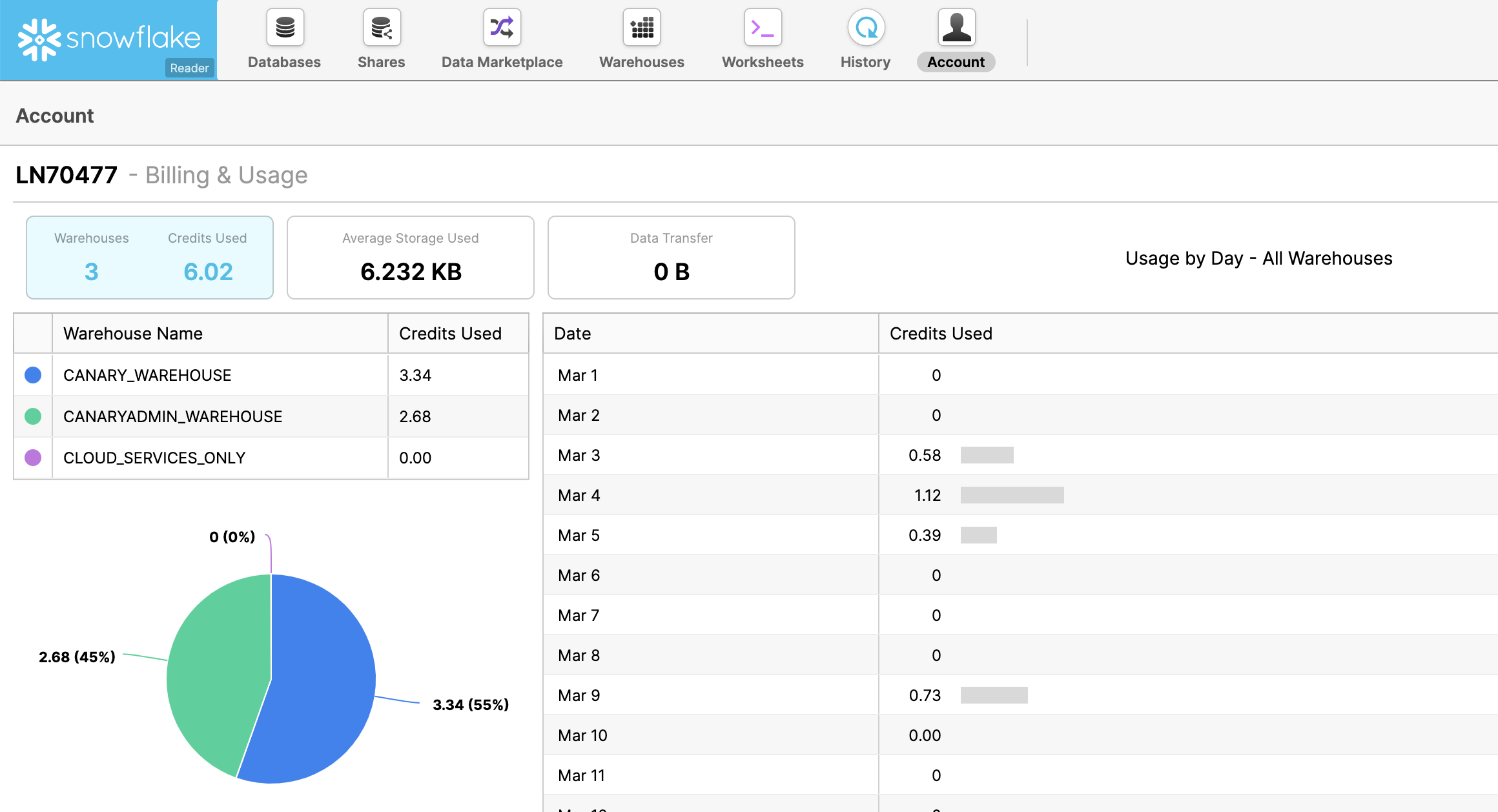

Clicking into this will provide insight into your credit use:

Note that there is also an “ADMIN” warehouse -- this may be used by the Beeswax Team to run troubleshooting queries on your behalf. You should not be able to see or query using this warehouse.

Alternatively, Snowflake stores credit consumption as a native table, and you can query it using this functionality.

Resource Monitors

Resource Monitors are a concept within Snowflake’s ecosystem that allow us to cap Antenna usage for both parties’ mutual benefit (you probably don’t want to accidentally run tons of expensive queries without knowing it).

Customers with a Beeswax-hosted Antenna instance are configured with a 500 Account-wide credit cap (~$900) that is refreshed monthly. This cap can be lifted with approval by the Beeswax team.

Tips on Efficiently Querying Your Data

As the scale of some of these datasets can be quite large, optimizing your queries can be a smart way to control your credit use.

Date Filters

Beeswax highly recommends using date filters with your queries for optimal performance, this will dramatically reduce the number of data scanned by Snowflake.

In most tables, you will find a bid_time_utc column which represents the time of the corresponding Bid Request for the event in question. Some tables will also contain an rx_time_utc column. This represents the time of receipt for the event in questions.

We recommend filtering most of your queries on one of these columns for optimal performance of queries.

Using Dates with Joined Tables

If you opt to join multiple views together, it is recommended you apply a date filter on both tables being joined to improve performance. For example, the sample query above section should become:

SELECT ...

FROM antenna.shared_impression_details_view i

LEFT JOIN antenna.shared_attributed_conversions_view c

ON i.auction_id = c.auction_id

WHERE i.bid_time_utc >= ‘2020-03-01 00:00:00’

AND c.bid_time_utc >= ‘2020-03-01 00:00:00’

Note on Bids and Bid Response View

For customer-friendliness, these views are generated by joining multiple tables together under the hood.

The data joined together is Bid Requests (Auctions) and Bid Responses (Bids), because an Auction can have multiple bids sent from your bidder. When the data is joined together, Bid Request data may be duplicated for each Bid Response. You should notice this in any query that is trying to calculate unique auctions being bid upon vs. unique total bids.

Similarly, as a result, each of these tables has multiple potential date filters. To optimize the query the view runs under the hood. The relevant columns to filter on are bid_request_bid_time_utc and bid_response_bid_time_utc. So, for example, a well-optimized query would look like the following:

SELECT *

FROM shared_bid_response_feedback_view

WHERE bid_response_bid_time_utc > '2020-03-08'

AND bid_request_bid_time_utc > '2020-03-08'

Limiting Results

If you do not need all results in a query, such as simply to preview results in a table, we recommend specifying a LIMIT clause to your query. Doing so, allows your query to exit early and will not need to process all rows before returning a result.

Strategically Use Computationally Draining Functions

When writing SQL certain keywords will be more computationally taxing on Snowflake, and as a result will incur more compute costs. In many cases, queries can be written without using these.

ORDERing Results

Ordering is sometimes a necessary part of an analysis, but ordering results requires all results to be processed before a result can be returned. Ordering can also be computationally complex especially when ordering on fields like string values. Ordering will also nullify any benefit of LIMIT-ing results.

Ordering is typically fine in most queries, but if it’s not a necessary part of your analysis, you will run more efficient queries with it omitted.

COUNT DISTINCTs

Sometimes it is necessary to get a count of distinct items in a set. When run across large datasets COUNT(DISTINCT ...) will consume large amounts of resources. If you can sacrifice exactness for approximation, Snowflake offers support for Approximate Distinct Counts via a HyperLogLog algorithm. You can use this with the HLL() or APPROX_COUNT_DISTINCT() SQL functions.

Working with Warehouses

Customers using the Beeswax-hosted version of Antenna have the ability to create and resize their own warehouses just as a full-featured Snowflake customer would. Warehouse size denotes an amount of dedicated compute resources for queries. By default Beeswax provisions a single Medium Warehouse for you, but you may create new ones.

Larger warehouses will consume more credits, but will also run queries more quickly. Depending on your use case, it may be worthwhile to create a larger warehouse for specific queries.

You can create a new Warehouse in the UI or via SQL commands. For example:

CREATE OR REPLACE WAREHOUSE my_wh

WITH

WAREHOUSE_SIZE = 'X-LARGE'

AUTO_SUSPEND = 60;

Note that new warehouses need to have an auto suspend policy set or they will only suspend after 10 minutes of inactivity. You are charged for non-idle time of a warehouse, and a warehouse will suspend after a specified amount of activity. When creating a new warehouse you will likely want to set this to a low value.

If you wish to resize a warehouse this can also be done via the UI or with SQL commands. Find more information on warehouses in Snowflake’s Documentation for Warehouses.

Snowflake SQL Documentation

Antenna SQL access is based on Snowflake. You can access Snowflake’s SQL Documentation here, and can assist you with most questions about queries.

Updated 12 months ago